The article was written in collaboration with S. Dobridnyuk, the Director for Research and Innovation, Diasoft Systems. Citation: Gusev A.V., Dobridnyuk S.L. Artificial intelligence in medicine and healthcare // Informacionnoe obshchestvo (Information Society), 2017.-№4-5-С. 78-93

Introduction

Today, artificial intelligence (AI) is considered one of the most promising areas of development not only in the IT industry but in many other areas of human activity. In particular, AI-based solutions are one of the main hopes for the implementation of the Digital Economy.

As electricity led to a new industrial revolution in the 19th century, so artificial intelligence is becoming one of the main drivers of a profound transformation of society and the economy in the 21st century. However, unlike previous industrial revolutions, the main driver of these changes is neither technology nor IT as such: society itself and its way of life are changing. Computerization is transforming consumer behavior. Consumers, having access to a different quality of information, are becoming more experienced and demanding. With IT, managers have received high-quality professional tools for monitoring, management, and control. The policies of the state and of investors are changing too: they no longer want to invest in professions and activities that rely on routines inherited from the past and on low-skilled manual labor. Workers in these professions are being replaced by robots and services based on artificial intelligence.

According to IDC, the market for cognitive systems and AI technologies in 2016 was approximately $7.9 billion. Analysts believe that through the end of this decade, the compound annual growth rate (CAGR) will be 54%. As a result, in 2020 the industry will exceed $46 billion. The largest share of this market will be cognitive applications that automatically study data and make various estimates, recommendations, or predictions. Investments in AI software platforms that provide tools, technologies, and services based on structured and unstructured information will total $2.5 billion per year. The market for artificial intelligence in health and life sciences, according to Frost & Sullivan estimates, will also grow at 40% per year, reaching $6.6 billion in 2021.

A bit of history

Artificial intelligence has a long history that builds on Turing's theoretical work on cybernetics from the first half of the 20th century, although the conceptual prerequisites appeared even earlier, in the philosophical works of René Descartes ("Discourse on the Method", 1637) and Thomas Hobbes ("Human Nature", 1640).

In the 1830s, the English mathematician Charles Babbage came up with the concept of a sophisticated digital calculator, the Analytical Engine, which could calculate the moves for a game of chess. Later, in 1914, the director of one of the Spanish technical institutes, Leonardo Torres Quevedo, created an electromechanical device capable of playing the simplest chess endgames almost as well as a person.

Since the mid-1930s, when Turing published works discussing the problems of creating devices capable of independently solving various complex problems, artificial intelligence has attracted the attention of the global scientific community. Turing proposed considering a machine intelligent if a tester cannot distinguish it from a human during communication. At the same time, the Baby Machine concept appeared, which implies teaching artificial intelligence like a small child rather than creating an "intelligent adult" robot at once. It was the prototype of what we now call machine learning.

In 1954, the American researcher Newell decided to write a program for playing chess. Analysts from the RAND Corporation were involved in the work. The method proposed by Shannon, the founder of information theory, was used as the theoretical basis of the program, and its precise formalization was carried out by Turing.

Mathematician John McCarthy at the Stanford Artificial Intelligence Lab

In the summer of 1956, the first working conference was held at Dartmouth College in the United States with the participation of such scientists as McCarthy, Minsky, Shannon, and others, who were later named the founders of artificial intelligence. For 6 weeks, scientists discussed the possibilities of implementing projects in the field of artificial intelligence. It was then that the term Artificial Intelligence (AI) itself appeared. More information about this event, which became the starting point of AI, is available here (in Russian): https://meduza.io/feature/2017/07/01/pomogat-lyudyam-ili-zamenyat-ih-kak-v-amerike-1950-h-sozdavali-iskusstvennyy-intellekt.

It should be clarified that AI research has not always been successful, and its authors have not always triumphed. After the explosive interest of investors, technologists, and scientists in the 1950s and the fantastic expectations that the computer was about to replace the human brain, severe disappointment followed in the 1960s and 1970s. The capabilities of computers at that time did not allow complex calculations. Scientific thought on the development of the mathematical apparatus of AI also reached a dead end. Echoes of this pessimism can still be found in many applied computer science textbooks. In popular culture and even in government regulations, the image formed of a robot or a cybernetic algorithm as a pathetic, unworthy agent that has to be fully controlled by a human.

However, since the mid-1990s, interest in AI has returned, and the technology has begun to develop at a rapid pace. Since then, there has been a burst of research and patent activity on this topic.

AI development today

The first examples of inspiring and impressive results from the application of developments in the field of artificial intelligence have been achieved in activities that require consideration of a large number of frequently changing factors and flexible adaptive human response, for example, in entertainment and games.

Interest in the possibility of creating a "smart machine", comparable in its intellectual capabilities to a human, has been steadily growing since 1997, when the IBM Deep Blue supercomputer defeated the world chess champion, Garry Kasparov. Read more about this event here: http://mashable.com/2016/02/10/kasparov-deep-blue/

Deep Blue Supercomputer Defeats World Chess Champion Garry Kasparov

In 2005-2008, there was a quantum leap in AI work. The mathematical community developed new theories and models for training multilayer neural networks, which became the foundation for the theory of deep machine learning. In addition, the IT industry began to produce high-performance and, most importantly, inexpensive and affordable computing systems. The joint efforts of mathematicians and engineers have produced outstanding success over the past 10 years, with extensive practical results in AI projects.

In 2011, the IBM Watson cognitive learning system defeated the unchallenged champions in the Jeopardy! game.

In early 2016, Google's AlphaGo program beat the European Go champion, Fan Hui. Two months later, AlphaGo defeated Lee Sedol, one of the best Go players in the world, 4-1. With this event, AI crossed a historic frontier. Before that, it was believed that a computer could not beat a player of this level: the level of abstraction was too high, and there were too many possible lines of play to brute-force. In a sense, a computer playing Go needs to be able to think creatively.

In January 2017, Libratus, a program from Carnegie Mellon University, won the 20-day poker tournament "Brains Vs. Artificial Intelligence: Upping the Ante", winning more than $1.7 million. The next victory was achieved by an improved version of the AI called Lengpudashi, against World Series of Poker (WSOP) competitor Alan Du and a number of scientists and engineers. The peculiarity of this match was that the players planned to defeat the AI by exploiting its weaknesses. However, the strategy did not work, and the advanced version of Libratus won. According to Bloomberg, Libratus co-developer Noam Brown said that humans underestimate artificial intelligence: “People think bluff is human, but it’s not. The computer can figure out that if you bluff, you can win more.”

Over the past few years, AI-based solutions have been implemented in many areas of activity, having achieved increased efficiency of processes, and not only in the entertainment sector. Technological giants such as Facebook, Google, Amazon, Apple, Microsoft, Baidu and a number of other companies are investing huge amounts of money in AI research and are already applying various developments in their practice. In May 2017, Microsoft announced that it plans to use AI mechanisms in each of its software products and make them available to every developer.

The falling cost and growing availability of AI platforms have allowed not only large corporations but also specialized companies and even startups to work with them. In the last couple of years, many small research teams have appeared, consisting of several people and lacking huge financial resources. They nevertheless manage to come up with promising new ideas and working solutions based on AI. One of the most famous examples is the startup behind Prisma, a very popular mobile app: its development team created a service that processes photos in the style of a particular artist.

The massive development and implementation of AI in many directions at once became possible due to several key factors in the development of the IT industry: the penetration of high-speed Internet, a significant increase in the performance and availability of modern computers with a simultaneous decrease in the cost of owning one, the development of cloud solutions and mobile technologies, and the growth of the free software market.

The most susceptible to the use of AI are the industries of mass and distributed consumer services, such as advertising, marketing, retail, telecom, government services, insurance, banking and fintech. A wave of changes has reached such conservative spheres of activity as education and healthcare.

What is artificial intelligence?

In the early 1980s, computing scientists Barr and Feigenbaum proposed the following definition of AI: “artificial intelligence is part of computer science concerned with designing intelligent computer systems, that is, systems that exhibit the characteristics we associate with intelligence in human behavior such as understanding language, learning, reasoning, solving problems and so on”.

Jeff Bezos, CEO of Amazon, writes about AI: “Over the past decades computers have broadly automated tasks that programmers could describe with clear rules and algorithms. Modern machine learning techniques now allow us to do the same for tasks where describing the precise rules is much harder.”

In fact, today artificial intelligence includes various software systems and the methods and algorithms used in them, and their main feature is the ability to solve intellectual problems in the same way as a human. Among the most popular areas of AI application are forecasting, evaluating any digital information with an attempt to give a conclusion on it as well as searching for hidden patterns in data (data mining).

We emphasize that now the computer is not capable of simulating complex processes of human higher nervous activity, such as the manifestation of emotions, love, and creativity. This refers to the area of the so-called artificial general intelligence (AGI), where a breakthrough is expected no earlier than 2030-2050.

At the same time, the computer successfully solves the problems of “weak AI”, acting as a cybernetic automaton, working according to the rules prescribed by a person. The number of successfully implemented projects of the so-called “average AI” is growing. These terms refer to projects where the IT system has elements of adaptive self-learning, improves as the primary data accumulates, reclassifies text, graphics, photo/video, audio data, etc. in a new way.

Neural networks and machine learning: the basic concepts of AI

To date, a variety of approaches and mathematical algorithms for building AI systems have been accumulated and systematized, such as Bayesian methods, logistic regression, support vector machines, decision trees, ensembles of algorithms, etc.

Recently, a number of experts have concluded that the majority of modern successful implementations are solutions built on technologies of deep neural networks and deep learning.

Neural networks are based on an attempt to recreate a primitive model of nervous systems in biological organisms. In living things, a neuron is an electrically excitable cell that processes, stores and transmits information using electrical and chemical signals through synaptic connections. The neuron has a complex structure and narrow specialization. By connecting to each other to transmit signals through synapses, neurons create biological neural networks. The human brain has an average of about 86 billion neurons and 100 trillion synapses. In fact, this is the basic mechanism of learning and brain activity of all living beings, i.e. their intelligence. For example, in Pavlov's classic experiment, a bell rang each time just before the dog was fed, and the dog quickly learned to associate the bell with food. From a physiological point of view, the result of the experiment was the establishment of synaptic connections between the regions of the cerebral cortex responsible for hearing and the regions responsible for controlling the salivary glands. As a result, when the cortex was excited by the sound of a bell, the dog began to salivate. This is how the dog learned to react to signals (data) coming from the outside world and to come to the right conclusion.

Artificial neuron

The ability of biological nervous systems to learn and correct their mistakes formed the basis of research in the field of artificial intelligence. The initial task was to try to artificially reproduce the low-level structure of the brain, i.e. create an artificial brain. As a result, the concept of an artificial neuron was proposed: a mathematical function that converts several input facts into one output, assigning influence weights to them. Each artificial neuron can take the weighted sum of the input signals and, if the total input exceeds a certain threshold level, transmit the binary signal further.
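The weighted-sum-and-threshold behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `threshold_neuron`, the weights, and the threshold value are assumptions chosen for the example.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Hypothetical settings: with equal weights and a threshold of 1.5,
# the neuron fires only when both binary inputs are 1 (logical AND).
and_weights = [1.0, 1.0]
and_threshold = 1.5
and_outputs = [
    threshold_neuron([a, b], and_weights, and_threshold)
    for a in (0, 1)
    for b in (0, 1)
]
print(and_outputs)
```

Changing only the weights or the threshold changes what the neuron computes, which is why training a network amounts to adjusting these numbers.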

Artificial neurons are combined into networks by connecting the outputs of some neurons to the inputs of others. Connected and interacting with each other, artificial neurons form an artificial neural network, a specific mathematical model that can be implemented in software or hardware. To put it quite simply, a neural network is just a black box program that receives data as input and outputs responses. Built from a very large number of simple elements, a neural network is capable of solving extremely complex problems.

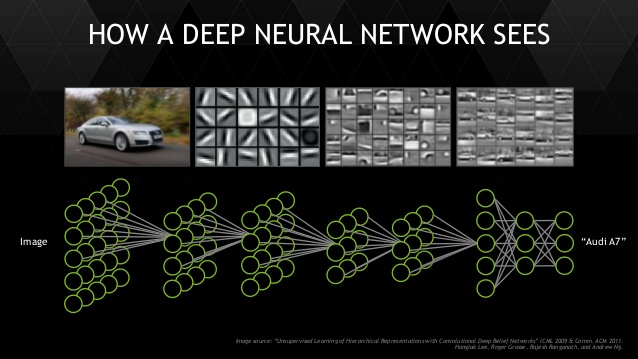

The principle of a neural network operation on the example of the problem of recognizing a car brand in an image, source https://wccftech.com/article/nvidia-demo-skynet-gtc-2014-neural-net-based-machine-learning-intelligence/

The mathematical model of a single neuron was first proposed in 1943 by the American neurophysiologists and mathematicians Warren McCulloch and Walter Pitts, who also proposed the definition of an artificial neural network. In 1957, Frank Rosenblatt developed this model into the perceptron and simulated it on a computer. We can say that neural networks are one of the oldest ideas for the practical implementation of AI.

Currently, there are many models for the implementation of neural networks. Classic single-layer neural networks are used to solve simple problems. A single-layer neural network is mathematically identical to an ordinary first-degree (linear) polynomial, a weighting function traditionally used in expert models. The number of variables in the polynomial is equal to the number of network inputs, and the coefficients in front of the variables are equal to the weight coefficients of the synapses.

There are mathematical models in which the output of one neural network is sent to the input of another, and cascades of connections are created: the so-called multilayer neural network (MNN) and one of its most powerful versions, convolutional neural network (CNN).
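To illustrate why cascading layers adds capability, the sketch below wires three threshold neurons into a two-layer network that computes XOR, a function no single threshold neuron can represent. The weights and thresholds are hand-picked assumptions for the example, not values obtained by training.

```python
def neuron(inputs, weights, threshold):
    """Threshold neuron: fires (1) when the weighted input sum exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def xor_network(a, b):
    """Two-layer network: the outputs of the hidden neurons feed the output neuron."""
    # Hidden layer (hand-picked, hypothetical weights)
    h_or = neuron([a, b], [1.0, 1.0], 0.5)   # fires if at least one input is 1
    h_and = neuron([a, b], [1.0, 1.0], 1.5)  # fires only if both inputs are 1
    # Output layer: "OR but not AND"
    return neuron([h_or, h_and], [1.0, -1.0], 0.5)


xor_outputs = [xor_network(a, b) for a in (0, 1) for b in (0, 1)]
print(xor_outputs)
```

In practice the weights of such networks are found by training on data rather than set by hand; the point here is only that the second layer combines the decisions of the first.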

MNNs have great computing power, but they also require huge computing resources. Taking into account the placement of IT systems in the cloud, multilayer neural networks have become available to a larger number of users and now they have become the foundation of modern AI solutions. In 2016, the US-based cognitive computing technology company Digital Reasoning built and trained a neural network of 160 billion digital neurons. This is significantly more powerful than the neural networks at the disposal of Google (11.2 billion neurons) and the US National Laboratory in Livermore (15 billion neurons).

Another interesting kind of neural network is the recurrent neural network (RNN), in which the output of a network layer is fed back to one of the inputs. Such networks have a "memory effect" and are able to track the dynamics of changes in input factors. A simple example is a smile. A person begins to smile with barely noticeable movements of the muscles around the eyes and face before clearly showing emotion. An RNN can detect such movement at an early stage, which is useful for predicting the behavior of a living object over time by analyzing a series of images, or for constructing a sequential stream of speech in natural language.
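The "memory effect" can be shown with a single recurrent unit whose previous output is fed back as an extra input. This is a simplified sketch: the function name `run_recurrent_cell` and the weights `w_input` and `w_state` are illustrative assumptions, and real RNNs use many such units with trained weights.

```python
import math


def run_recurrent_cell(sequence, w_input=1.0, w_state=0.5):
    """Feed a sequence through one recurrent unit and return every hidden state."""
    state = 0.0
    states = []
    for x in sequence:
        # The new state depends on the current input AND on the previous state.
        state = math.tanh(w_input * x + w_state * state)
        states.append(state)
    return states


# The same three values in a different order leave the unit in a different state,
# so the final output reflects the dynamics of the input, not just its last value.
rising = run_recurrent_cell([0.1, 0.2, 0.3])
falling = run_recurrent_cell([0.3, 0.2, 0.1])
print(rising[-1], falling[-1])
```

A feed-forward neuron would give the same output for the value 0.3 regardless of what came before; here the output for 0.3 depends on the preceding inputs.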

Machine learning is the process of machine analysis of prepared statistical data to find patterns and create the necessary algorithms on their basis (adjusting neural network parameters), which will then be used for predictions.

The algorithms created at the stage of machine learning will allow computer artificial intelligence to draw correct conclusions based on the data provided to it.

There are 3 main approaches to machine learning:

- supervised learning

- reinforcement learning

- unsupervised learning

In supervised learning, specially selected data is used, in which the correct answers are already known and determined, and the parameters of the neural network are adjusted to minimize the error. In this way, the AI can match the correct answers to each input example and identify possible dependencies of the answer on the input data. For example, a collection of X-ray images with the indicated conclusions will be the basis for teaching AI, its supervisor. From a series of obtained models, a person eventually chooses the most suitable one, for example, according to the maximum accuracy of the forecasts.
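As a rough sketch of "adjusting parameters to minimize the error" on data with known answers, the code below fits a one-input logistic neuron by gradient descent. The data, the learning rate, and the number of epochs are hypothetical values chosen for illustration; a real project would normally rely on a library such as TensorFlow rather than a hand-written loop.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, learning_rate=0.5, epochs=2000):
    """Fit the weight and bias of a one-input logistic neuron by gradient descent."""
    weight, bias = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(samples, labels):
            error = sigmoid(weight * x + bias) - y  # prediction minus known answer
            grad_w += error * x
            grad_b += error
        # Move the parameters a small step in the direction that reduces the error.
        weight -= learning_rate * grad_w / n
        bias -= learning_rate * grad_b / n
    return weight, bias


# Hypothetical labelled data: one measurement per case, label 1 = "finding present".
measurements = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
known_answers = [0, 0, 0, 0, 1, 1, 1, 1]

weight, bias = train_logistic(measurements, known_answers)
predictions = [1 if sigmoid(weight * x + bias) >= 0.5 else 0 for x in measurements]
print(predictions)
```

The known answers play the role of the supervisor: every update is driven by the difference between the current prediction and the labelled result.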

Often, the preparation of such data and retrospective responses requires a lot of human intervention and manual selection. In addition, the quality of the result is influenced by the subjectivity of the human expert. If for some reason the expert does not consider the entire sample and its attributes during training, or if the expert's conceptual model is limited by the current level of development of science and technology, the solution obtained by the AI will have the same blind spot. It is important to note that neural networks are functions with nonlinear transformations and are hyper-specific: the result of the AI algorithm will be unpredictable if the input contains parameters that go beyond the boundaries of the training sample. Therefore, it is important to train the AI system on examples and frequencies that match the subsequent real-life operating conditions. Geographic and socio-demographic factors have a strong influence, which, in the general case, means that mathematical models trained on population data from other countries and regions cannot be used without loss of accuracy. The expert is also responsible for the representativeness of the training sample.

Unsupervised learning is used where there are no pre-prepared answers and classification algorithms. In this case, AI focuses on independently identifying hidden dependencies and searching for an ontology. Machine learning makes it possible to categorize samples by analyzing hidden patterns and "auto-recovering" the internal structure and nature of the information. This helps to rule out systemic inattentiveness on the part of a doctor or researcher. Suppose, for example, that they are developing an AI predictive model for type 2 diabetes and focus primarily on blood glucose or patient weight. In doing so, they are forced to ignore all the other information in the medical history, which could also be useful. A deep learning approach makes it possible to train AI on a multi-million patient base and to analyze any test that has ever been recorded in a patient's electronic health record.
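One simple way to see grouping without pre-prepared answers is k-means clustering, shown here in a one-dimensional form. This is a swapped-in textbook technique used purely as an illustration; the article does not name a specific algorithm, and the readings below are hypothetical.

```python
def k_means_1d(values, centers, iterations=10):
    """Group unlabelled numbers around the given starting centers (Lloyd's algorithm)."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        # Assignment step: each value joins the cluster with the nearest center.
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: each center moves to the mean of its cluster.
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters


# Hypothetical unlabelled readings; no "correct answers" are supplied.
readings = [4.8, 5.1, 5.3, 5.0, 9.7, 10.2, 10.5, 9.9]
centers, clusters = k_means_1d(readings, centers=[min(readings), max(readings)])
print(centers)
print(clusters)
```

The algorithm discovers two groups on its own; giving those groups a meaningful name still requires a human or additional labelled data.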

Deep learning mechanisms usually use multilayer neural networks and a very large number of object instances to train a neural network. The number of records in the training set should be hundreds of thousands or even millions of examples, and even more when resources are not limited. In order to teach AI to recognize the face of a person in a photo, the development team at Facebook required millions of images with metadata and tags that indicate the presence of a face in the photo. Facebook's success in implementing the face recognition function lay precisely in the huge amount of initial information for training: the social network has accounts of hundreds of millions of people who posted a huge number of photos and at the same time pointed at the faces and marked (identified) people. Deep machine learning based on such a large amount of data has made it possible to create a reliable artificial intelligence, which now, in a matter of milliseconds, not only detects a person's face in an image but also quite often guesses who exactly is depicted in a photograph.

AI needs a large number of training set records to create the necessary classification rules. The more heterogeneous data is loaded into the system at the stage of machine learning, the more accurately these rules will be identified, and the more accurate the result of the AI will ultimately be. For example, when processing radiographs and MRI, multilayer neural networks are able to form an idea of the human anatomy and its organs from images. At the same time, computers cannot come up with names of organs similar to classical medical terminology in their computer classification. Therefore, at first, they need a translator from the internal machine dictionary to professional vocabulary.

It should also be taken into account that, due to nonlinearity, multilayer neural networks do not have an "inverse function": a computer, in general, will not be able to explain to a person why it came to a given conclusion. To prepare a motivated judgment, you need a human expert or another neural network trained to write correct transcripts and conclusions in natural human language.

The supervised learning method is more convenient and preferable in situations where there is accumulated and reliable retrospective initial data: learning based on it will require less time and will allow you to get a working AI solution faster. Where there is no opportunity to get a database with labelled information, it is necessary to apply self-learning methods based on deep machine learning; such solutions will not need human supervision.

Researchers and startups who are just beginning to work with AI and looking for opportunities for its application in healthcare may start with supervised machine learning methods. This will require fewer costs (in terms of time and money) to create a prototype of a working system and practical development of AI techniques. In this case, a functioning AI system for a specific task can be obtained faster. Currently, there are a large number of quality code libraries for artificial neural networks on the market, such as TensorFlow https://www.tensorflow.org/ for mathematical modelling, OpenCV http://opencv.org/ for image recognition tasks, supplied free of charge, under the free software license.

In addition to the practical effect of increased accuracy, which today can reach 95%, AI systems also process data at high speed. Experiments were conducted on pattern recognition from different angles, in which humans and computers competed. While the rate of displaying images was low (1-2 frames per minute), the person clearly outperformed the machine: when analyzing pathology images, the human error was no more than 3.5%, while the computer gave a diagnostic error of 7.5%. However, when the pace increased to 10 frames per minute and higher, the person's reaction weakened and fatigue set in, which led to a complete failure. The computer, on the other hand, continuously learned from its mistakes and only increased the accuracy of its work in each subsequent series. The paired operation of a person and a computer turned out to be promising: it made it possible to increase diagnostic accuracy by 85% at a relatively high speed of displaying images for a person.

Of course, one cannot talk about the effective construction of AI models and their accuracy if there is no necessary domestic digital information for their training. Therefore, it is critically important, even if not to reject traditional paperwork, to duplicate medical records in both paper and digital format and to start accumulating Russian electronic data banks. Moreover, it is crucial to enable them to be used in an impersonal form, without disclosing personal data of patients, to create and improve domestic AI solutions.

The difference between AI creation and conventional software development

The main difference between artificial intelligence methods and conventional programming is that when creating an AI, the programmer does not need to know all the dependencies between the input parameters and the result that should be obtained. Where such dependencies are well known or where there is a reliable mathematical model, for example, the calculation of a statistical report or the formation of a register for payment for medical care, it is hardly worth looking for an application for artificial intelligence, as conventional software products still do this better, more reliably, and in acceptable time.

Deep machine learning technology is effective where it is impossible to set clear rules, formulas and algorithms for solving a problem, for example, "is there a pathology on an X-ray image?". This technology assumes that instead of creating software for calculating predefined formulas, the machine is trained using a large amount of data and various methods that enable it to identify this formula based on empirical data and thereby learn how to perform a task in the future. At the same time, the development team is working on data preparation and training, not trying to write a program that will somehow analyze a snapshot according to predefined algorithms and get an answer whether there is an anomaly.
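The contrast with conventional programming can be illustrated on a deliberately tiny scale. Instead of a programmer hard-coding a rule such as "flag the case if the value exceeds some fixed number", the sketch below derives the cut-off from labelled examples. It is a toy stand-in for the idea, not deep learning itself, and the function name, feature values, and labels are all hypothetical.

```python
def learn_threshold(values, labels):
    """Pick the cut-off that misclassifies the fewest labelled examples."""
    ordered = sorted(set(values))
    # Candidate cut-offs: midpoints between neighbouring observed values.
    candidates = [(a + b) / 2 for a, b in zip(ordered, ordered[1:])]

    def errors(cut):
        # Count examples where "value above the cut-off" disagrees with the label.
        return sum((v > cut) != bool(y) for v, y in zip(values, labels))

    return min(candidates, key=errors)


# Hypothetical labelled examples: one numeric feature, label 1 = "anomaly present".
feature = [0.2, 0.4, 0.5, 0.9, 1.6, 1.8, 2.1, 2.5]
label = [0, 0, 0, 0, 1, 1, 1, 1]

cut_off = learn_threshold(feature, label)
print(cut_off)
```

The development effort shifts from writing the rule to preparing the examples: if the data changes, the same code produces a different rule without being rewritten.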

A whole class of information systems built on this paradigm has emerged, designated "IT + DT + AI + IOT", or digital platforms. IT means the general digitalization of processes and computerization of workplaces, DT refers to the accumulation of data and the use of powerful information processing technologies, and AI means that robotic AI algorithms will be created on the accumulated data and will act both in partnership with a person and independently. The abbreviation “IOT” means “internet of things”: a computing network consisting of physical objects (“things”) equipped with built-in technologies to interact with each other or with the external environment. The creation of digital platforms for healthcare needs is among the strategic priorities for the developed economies of the world, including Russia.

AI risks and concerns

Along with the avalanche-like growth of publications about the prospects of AI and the emergence of more and more new examples of creating IT solutions based on it, the number of statements by experts who are concerned about the consequences that may occur in the coming years and decades after their implementation is also increasing.

The fears of experts are that while artificial intelligence will bring radical efficiency gains in various industries, for ordinary people it will lead to unemployment and uncertainty in their careers, as machines replace their human jobs.

Automation, Hawking wrote, "in turn will accelerate the already widening economic inequality around the world. The internet and the platforms that it makes possible allow very small groups of individuals to make enormous profits while employing very few people. This is inevitable, it is progress, but it is also socially destructive."

Such fears are quite reasonable. For example, the American company Goldman Sachs has already replaced traders who traded stocks on behalf of large bank clients with an automatically working AI-based bot. Now, out of 600 people who worked in 2000, only two have remained. The rest have been replaced by trading robots, which are serviced by 200 engineers. More: http://www.zerohedge.com/news/2017-02-13/goldman-had-600-cash-equity-traders-2000-it-now-has-2

On the Amazon electronic platform, robotic programs are involved in arbitration of mutual claims between buyers and sellers of goods. They process over 60 million claims a year, nearly 3 times the number of all claims filed through the traditional US court system.

The arguments of those who favor a moderate introduction of AI and robots, and who propose holding back its pace with a so-called "tax on robots", seem reasonable. The taxes collected from each new robotic workplace could be used to finance training, retraining and employment programs for redundant employees.

Probably, the same changes should be expected in the healthcare sector. However, for Russia this may be a good thing, taking into account the serious problem of personnel shortage, the huge territory and low population density.

What kind of tasks can be assigned to AI?

Andrew Ng, of the Google Brain team and the Stanford Artificial Intelligence Laboratory, says that the media sometimes attribute unrealistic power to AI technologies. In fact, the real possibilities of using AI are quite limited: modern AI is still capable of giving accurate answers only to simple questions.

Together with a large amount of initial data for training, it is a real and feasible statement of the problem that is the most important condition for the future success or failure of an AI project. So far, AI cannot solve complex problems that are beyond the strength of a doctor, such as creating a fantastic device that independently scans a person and is able to make any diagnosis and prescribe effective treatment. Now AI is more likely to solve simpler problems, for example, to assess whether a foreign body or pathology is present on an X-ray or ultrasound image or to identify cancer cells in the cytological material. However, the steady increase in the accuracy of diagnostics through AI modules attracts attention. The publications have already stated the obtained values of the AI accuracy up to 93% when processing radiological images, MRI, mammograms; up to 93% accuracy when processing prenatal ultrasound; up to 94.5% in the diagnosis of tuberculosis; up to 96.5% in predicting ulcerative incidents.

According to Andrew Ng, one of the world's leading AI experts, the real capabilities of AI at this time can be assessed by the following simple rule: “If an ordinary person can complete a mental task in seconds, then we can probably automate it using AI either now or in the near future.”

Specific algorithms, or even specific solutions, are not the decisive factor in the success of AI in medicine. Examples of successful ideas are published openly, and open source software is already available, for example DeepLearning4j (DL4J, https://deeplearning4j.org/), Theano (http://deeplearning.net/software/theano/), Torch (http://torch.ch/), Caffe (http://caffe.berkeleyvision.org/), and several others.

An interesting approach to developing AI solutions is crowdsourcing. As of 2017, Kaggle (https://www.kaggle.com/) hosts more than 40 thousand experts and data scientists from all over the world, who solve AI problems posed by commercial and public organizations. The quality of the solutions obtained is sometimes higher than that of commercial companies' own developments. Participants on the platform are often motivated not by a cash prize for successfully solving a problem (there may not be one), but by professional interest in the task and by the chance to raise their personal rating as an expert. Crowdsourcing can save money and time for developers and customers who are new to AI.

In fact, there are only two main barriers to the more widespread use of AI in healthcare: the need for a large amount of training data and the need for a professional, creative approach to training AI. Without verified, high-quality data, AI will not work; this is the first serious difficulty in implementing it. Without talented people, simply applying ready-made algorithms to prepared data will not work either, because the AI has to be tuned to this data in order to solve a specific applied problem.

Artificial intelligence in healthcare today

Medicine and health care are already considered one of the strategic and promising areas in terms of effective implementation of AI. The use of AI can massively increase the accuracy of diagnostics, make life easier for patients with various diseases, increase the speed of development and release of new drugs, etc.

Perhaps the largest and most talked-about AI project in medicine is IBM Watson, the cognitive system of the American corporation IBM. Initially, this solution was trained for and then applied in oncology, where it has been helping to make accurate diagnoses and find an effective treatment for each patient.

To train IBM Watson, 30 billion medical images were analyzed, for which IBM had to buy Merge Healthcare for $ 1 billion. The training also required 50 million anonymized electronic health records, which IBM obtained by purchasing the startup Explorys.

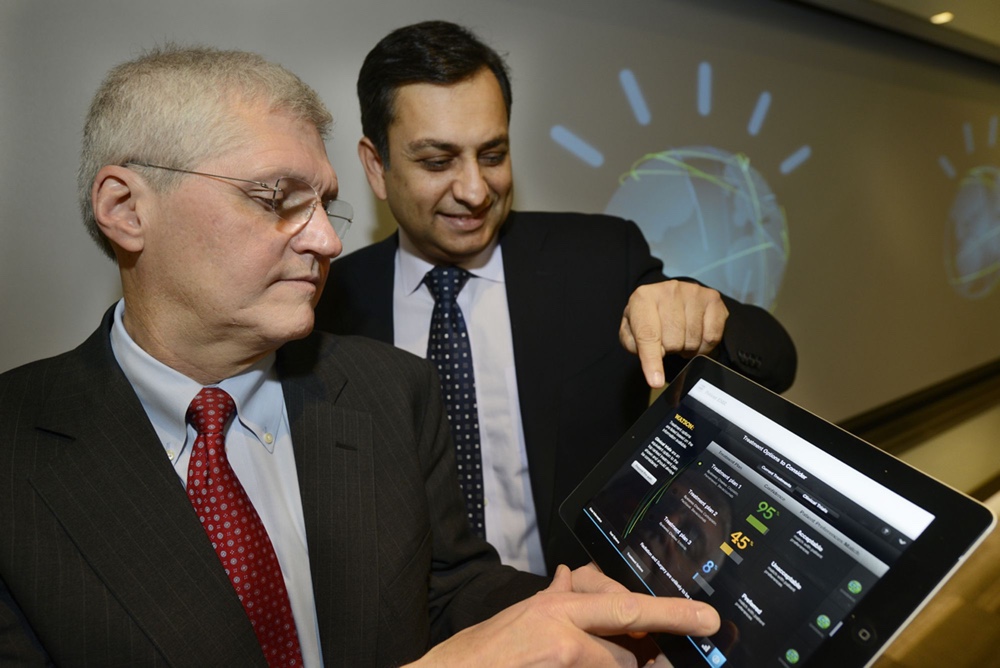

Mark Kris, M.D., Chief of Thoracic Oncology at Memorial Sloan-Kettering Cancer Center (left), and Manoj Saxena, General Manager of IBM Watson Solutions, work with the first IBM Watson-based oncology solution in New York on February 11, 2013. Source: http://fortune.com/ibm-watson-health-business-strategy/

In 2014, IBM announced a partnership with Johnson & Johnson and the pharmaceutical company Sanofi to train Watson to understand the results of scientific research and clinical trials. According to company representatives, this will significantly reduce the time needed for clinical trials of new drugs, and doctors will be able to prescribe the drugs that are most suitable for a particular patient. In the same year, IBM announced the development of Avicenna, software capable of interpreting both text and images, with separate algorithms for each data type. Ultimately, Avicenna should be able to understand medical images and records and act as an assistant to the radiologist. Another IBM project, Medical Sieve, is working on a similar problem: an AI-based medical assistant that can analyze hundreds of images for deviations from the norm. This will free radiologists and cardiologists to concentrate on the issues in which artificial intelligence is still powerless.

IBM recently began collaborating with the American Heart Association to extend Watson's capabilities and offer the system's assistance to cardiologists. As conceived by the authors of the project, the cognitive cloud platform will analyze a huge amount of medical data related to a particular patient. This data includes ultrasound images, X-rays, and all other graphical information that can help clarify a person's diagnosis. Early on, Watson's capabilities will be used to look for signs of aortic valve stenosis. In stenosis, the aortic opening is narrowed because the valve leaflets have fused, which interferes with the normal flow of blood from the left ventricle to the aorta. The problem is that valve stenosis is not easy to identify, even though it is a very common heart defect in adults (70-85% of cases among all defects). Watson will try to detect pathologies on medical images (stenosis, a tumor, an infection, or simply an anatomical anomaly) and give the attending physician an assessment that speeds up their work and improves its quality.

Physicians who specialize in rare childhood diseases at Boston Children's Hospital are using IBM Watson to make more accurate diagnoses: artificial intelligence searches for the necessary information in clinical databases and scientific journals stored in the Watson Health Cloud (http://www.healthcareitnews.com/news/boston-childrens-ibm-watson-take-rare-diseases).

Christopher Walsh, MD, PhD, Chief, Division of Genetics at Boston Children's Hospital, works with Watson for Genomics: https://www.cbsnews.com/news/ibm-watson-boston-childrens-combat-rare-pediatric-diseases/

It should be noted that the creators of the Watson project, as with any innovative product, did not set explicit economic goals. The costs of creating its components were usually higher than planned, and its maintenance is very expensive compared with traditional healthcare budgets. Rather, it can be viewed as a kind of testing ground where promising information technologies are tried out and researchers find inspiration. Proven and tested prototypes should then be transferred to mass production, with a better price-to-quality ratio and suitability for service in real conditions. At almost every conference on AI today, researchers from around the world present reports with statements such as "We are making our own Watson, and it will be better than the original."

Using the Emergent artificial intelligence system, researchers were able to identify five new biomarkers that could be targeted by new drugs in the treatment of glaucoma. According to the scientists, information about more than 600 thousand specific DNA sequences from 2.3 thousand patients, together with data on gene interactions, was entered into the AI system for this purpose.

The DeepMind Health project, which is led by a British company that is part of Google, has created a system that can process hundreds of thousands of medical records in a few minutes and extract the necessary information from them. Although this project, based on data systematization and machine learning, is still in its early stages, DeepMind is already collaborating with Moorfields Eye Hospital (UK) to improve the quality of treatment. Using a million anonymized tomographic eye images, the researchers are trying to create machine learning algorithms that would help detect the early signs of two eye diseases: wet age-related macular degeneration and diabetic retinopathy. Verily, another company within Google, is working in the same direction. Its specialists use artificial intelligence and the algorithms of the Google search engine to analyze what makes a person healthy.

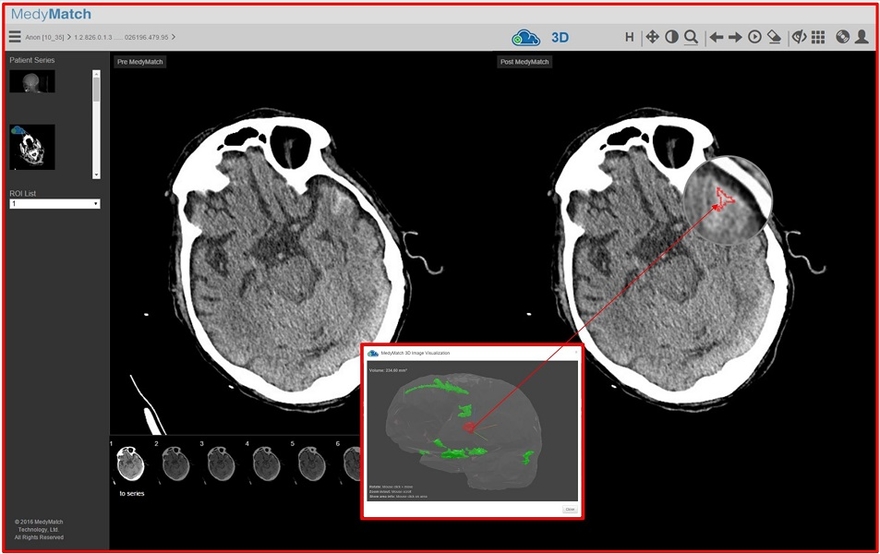

The Israeli company MedyMatch Technology, which employs only 20 people, has developed a solution based on AI and Big Data that helps doctors diagnose a stroke more accurately. To do this, the MedyMatch system compares the image of the patient's brain in real time with hundreds of thousands of other images stored in its "cloud". A stroke can have two causes: a cerebral hemorrhage or a blood clot. Each of these cases requires a different approach to treatment. However, according to statistics, despite improvements in CT, the rate of diagnostic errors has not changed over the past 30 years and is approximately 30%. That is, in almost every third case the doctor prescribes the wrong treatment, with grave consequences for the patient. The MedyMatch system is able to track the smallest deviations from the norm that a specialist does not always notice, thus minimizing the likelihood of errors in diagnosis and treatment.

The AI-powered MedyMatch tool helps ambulance teams quickly confirm bleeding in the brain and treat it appropriately. Source: FierceBiotech.

Recently, more and more attention has been paid to applying AI technologies to create solutions not only for doctors but also for patients. One example is the mobile application of the British company Your.MD, launched in November 2015. This program uses AI, machine learning, and natural language processing technologies. The patient can say, for example, “I have a headache,” and then receive follow-up expert advice from the smartphone. To do this, the Your.MD artificial intelligence system is connected to the world's largest map of symptoms, created by Your.MD itself: it covers 1.4 million symptoms, and identifying them took more than 350 thousand hours. Each symptom has been verified by a British healthcare professional. The AI selects the most appropriate symptom based on the unique profile of the smartphone owner.

Medtronic offers an app that can predict a critical drop in blood sugar three hours before the event. To do this, Medtronic and IBM apply cognitive analytics technologies to data from blood glucose meters and insulin pumps. The app will help people understand the impact of daily activity on diabetes. In another IBM project, this time in collaboration with the diagnostic company Pathway Genomics, the OME app was created, which combines cognitive and precision medicine with genetics. The purpose of the app is to provide users with personalized information to enhance their quality of life. The first version of the app includes recommendations for diet and exercise, information on metabolism that depends on the user's genetic data, a map of the user's habits, and information about their health. In the future, electronic health records, insurance information, and other additional data will be added.

In addition to direct clinical use, AI elements can also be used in the auxiliary processes of a medical organization. For example, AI could be used to automatically assess the quality of a medical information system's operation or to handle information security tasks. AI systems can recommend timely updates to reference data and tariffs, or even notice an employee's anomalous behavior and recommend that the manager send the employee for training if there are signs of insufficient proficiency or slow response.

Review of the most promising areas of development

Summing up the above, we believe that in the near future the following tasks will be automated using AI in healthcare:

1. Automated diagnostic methods, for example, analysis of X-ray or MRI images for the automatic detection of pathology, microscopic analysis of biological material, automatic interpretation of ECGs and electroencephalograms, etc. Storing a large number of interpreted diagnostic results in electronic form, where there is not only the data itself but also a formalized conclusion about it, makes it possible to create truly reliable and valuable software products. Such products can, if not replace doctors, then give them effective assistance: for example, independently identify routine pathology and draw attention to it, reduce the time and cost of an examination, and enable outsourcing and remote diagnostics.

2. Speech recognition and natural language understanding systems can be of great help to both the doctor and the patient. Applications range from ordinary speech-to-text transcription, used as a more advanced interface to medical information systems (MIS), a call center, or a voice assistant, to ideas such as automatic language translation, speech synthesis for reading out records from the MIS, and a robot receptionist in a hospital admission department or at a clinic's reception desk that can answer simple questions and route patients.

3. Systems for analyzing big data and predicting events address problems that AI can already solve and that can have a significant effect. For example, real-time analysis of changes in morbidity makes it possible to quickly predict changes in the number of patients visiting medical organizations or in the need for drugs, to prevent an epidemic, or to give an accurate forecast of a deterioration in health, which can sometimes save a patient's life.

4. Systems for automatic classification and verification of information help to link patient information stored in various forms in various information systems. For example, they can build an integrated electronic health record from individual episodes that are described at different levels of detail and with unclear or contradictory structure. Another promising technology is machine analysis of the content of social networks and Internet portals in order to obtain sociological, demographic, and marketing information about the quality of the healthcare system and of individual medical institutions.

5. Automatic chatbots for patient support can be of significant help in increasing patient adherence to healthy lifestyles and prescribed treatment. Chatbots can already learn to answer routine questions, suggest what patients should do in simple situations, connect the patient with the right doctor via telemedicine, give dietary recommendations, etc. This shift of healthcare towards self-care and greater involvement of patients in protecting their own health without a visit to the doctor can save significant financial resources.

6. Development of robotics and mechatronics. The well-known da Vinci surgical robot is just the first step towards, if not replacing a doctor with a machine, then at least improving the quality of work of healthcare professionals. The integration of robotics with AI is now considered one of the promising areas of development, capable of transferring routine manipulations to machines, including in medicine.
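As a small illustration of item 3 in the list above (analysis of changes in morbidity), the sketch below flags weeks in which the number of registered cases rises sharply above the recent baseline. It is a simple statistical rule, not a machine learning model, and the function name, parameters, and case counts are hypothetical assumptions made for this example.

```python
from statistics import mean, stdev


def flag_unusual_weeks(weekly_cases, window=4, threshold=2.0):
    """Return indices of weeks whose case count exceeds the mean of the
    previous `window` weeks by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(weekly_cases)):
        history = weekly_cases[i - window:i]   # the preceding weeks only
        baseline = mean(history)
        spread = stdev(history)                # sample standard deviation
        if weekly_cases[i] > baseline + threshold * spread:
            flagged.append(i)
    return flagged


# Hypothetical weekly counts of registered cases in one region.
weekly_cases = [100, 104, 98, 102, 101, 99, 160, 103]

print(flag_unusual_weeks(weekly_cases))  # prints [6]
```

In practice such a rule would only be a baseline; a real forecasting system would also have to account for seasonality, reporting delays, and population changes.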

Of course, when it comes to human health, the principle of “do no harm” is paramount, and it must be backed by a strict regulatory framework and a thorough evidence base when new technologies are introduced. At the same time, is it right to treat new technologies with skepticism in advance and to deny their possible practical application while ignoring obvious successes?

Regardless of which AI solutions in healthcare will be successfully implemented and which will be reasonably rejected, it should be recognized that in the 21st century AI is the technology that will have the greatest transformative impact.